DrivingStereo

News

2020-09-20: Full-resolution data for the testing dataset released.

2020-01-23: Calibration files released.

2020-01-22: 2000 selected frames covering different weather conditions released.

2019-11-12: Testing dataset released.

2019-11-10: Training dataset released.

2019-06-13: Demo images released. Download link

2019-06-08: Our paper is publicly available via open access. Paper link

2019-05-04: Demo video uploaded to YouTube. Video link

Download

Google Drive and Baidu Cloud links are provided for downloading the left images, right images, disparity maps, and depth maps. The dataset contains 182188 stereo pairs in total: 174437 in the training set and 7751 in the testing set. For convenience, the images are compressed by sequence. Unlike the original resolution reported in the paper, all images and maps are downsampled to half resolution, with an average image size of 881x400. In addition to the sequential training data, we also select 2000 frames covering four weather conditions (sunny, cloudy, foggy, rainy) for weather-specific tasks.

As in the KITTI stereo dataset, both disparity maps and depth maps are saved as uint16 PNG images. The disparity or depth value of each pixel is computed by converting the uint16 value to float and dividing it by 256. Zero values indicate invalid pixels.
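A minimal Python sketch of this conversion, assuming NumPy and Pillow are installed. The names `decode_uint16_map` and `load_uint16_png` are hypothetical helpers, not part of any released toolkit. Pillow is imported inside the loader so the decoding step can also be applied to arrays read by other means.

```python
import numpy as np


def decode_uint16_map(raw, scale=256.0):
    """Convert a raw uint16 disparity or depth array to float32 values.

    Hypothetical helper: divides by `scale` (256 for the half-resolution maps)
    and returns a boolean mask that is False where the stored value is 0,
    i.e. where the pixel is invalid.
    """
    raw = np.asarray(raw, dtype=np.uint16)
    values = raw.astype(np.float32) / np.float32(scale)
    valid = raw > 0
    return values, valid


def load_uint16_png(path):
    """Read a 16-bit PNG into a uint16 array (hypothetical helper)."""
    from PIL import Image  # imported lazily; only needed for file loading

    with Image.open(path) as img:
        return np.array(img, dtype=np.uint16)
```

Typical use would be `disparity, valid = decode_uint16_map(load_uint16_png(path))`, where `path` points to one of the downloaded disparity or depth PNGs. Keeping the mask separate lets invalid pixels be excluded from losses or metrics instead of being treated as zero disparity.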

Training data

| Data Type | Google Drive | Baidu Cloud |
|---|---|---|
| Left images | Download | Download (Extraction Code: ijyc) |
| Right images | Download | Download (Extraction Code: bc4k) |
| Disparity maps | Download | Download (Extraction Code: ma6a) |
| Depth maps | Download | Download (Extraction Code: njpc) |

The training dataset contains 38 sequences and 174437 frames. Each sequence is compressed into an individual zip file, which can be downloaded from the links above. The attributes of each sequence are given in our supplementary materials.
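Because each sequence ships as its own zip file, a short standard-library Python sketch can unpack a folder of downloaded archives. The archive names and their internal layout are not specified here, so the sketch assumes all archives sit in one directory and extracts each into a subfolder named after the archive; `extract_sequences` is a hypothetical helper.

```python
import zipfile
from pathlib import Path


def extract_sequences(archive_dir, output_dir):
    """Extract every .zip in archive_dir into output_dir/<archive name>/.

    Hypothetical helper; returns the list of created sequence folders
    in sorted order.
    """
    archive_dir, output_dir = Path(archive_dir), Path(output_dir)
    extracted = []
    for archive in sorted(archive_dir.glob("*.zip")):
        target = output_dir / archive.stem  # one folder per sequence archive
        target.mkdir(parents=True, exist_ok=True)
        with zipfile.ZipFile(archive) as zf:
            zf.extractall(target)
        extracted.append(target)
    return extracted
```

Extracting into per-archive folders avoids file-name collisions if different sequences happen to reuse frame names.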

Different weathers

| Weather | Google Drive | Baidu Cloud |
|---|---|---|
| Sunny | Download | Download (Extraction Code: xh86) |
| Cloudy | Download | Download (Extraction Code: 7iqh) |
| Foggy | Download | Download (Extraction Code: 5k5b) |
| Rainy | Download | Download (Extraction Code: 1rrd) |

For specific tasks such as image dehazing, deraining, or general image restoration, we select 2000 frames from the sequences covering four weather conditions (sunny, cloudy, foggy, and rainy), with 500 frames per weather class. In addition to the half-resolution data, we also release full-resolution data for these frames. Unlike the half-resolution disparity maps, the disparity value of each pixel in a full-resolution map is computed by converting the uint16 value to float and dividing it by 128.
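Since the divisor depends on resolution, a decoder has to know which kind of map it is reading. The Python sketch below (assuming NumPy) selects the divisor stated above; `decode_disparity` is a hypothetical helper, and applying 128 to every full-resolution disparity map, rather than only to this weather subset, is an assumption.

```python
import numpy as np

# Divisors stated in the text: 256 for half-resolution disparity maps,
# 128 for full-resolution disparity maps.
HALF_RES_DISPARITY_SCALE = 256.0
FULL_RES_DISPARITY_SCALE = 128.0


def decode_disparity(raw, full_resolution):
    """Return float32 disparities in pixels and a validity mask (raw != 0).

    Hypothetical helper; assumes the 128 divisor applies to every
    full-resolution disparity map, not only the weather subset.
    """
    raw = np.asarray(raw, dtype=np.uint16)
    scale = FULL_RES_DISPARITY_SCALE if full_resolution else HALF_RES_DISPARITY_SCALE
    return raw.astype(np.float32) / np.float32(scale), raw > 0
```

The same stored value therefore decodes differently: a raw value of 256 gives 1.0 px in a half-resolution map and 2.0 px in a full-resolution map.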

Testing data

| Data Type | Google Drive | Baidu Cloud |
|---|---|---|
| Left images | Download | Download (Extraction Code: kf56) |
| Right images | Download | Download (Extraction Code: b6r7) |
| Disparity maps | Download | Download (Extraction Code: qerd) |
| Depth maps | Download | Download (Extraction Code: ax7f) |

The testing dataset contains 4 sequences and 7751 frames. In addition to the half-resolution data, the full-resolution data is also provided for evaluation.

Calibration Parameters

| Split | Google Drive | Baidu Cloud |
|---|---|---|
| Train | Download | Download (Extraction Code: vfcf) |
| Test | Download | Download (Extraction Code: 88fp) |

The calibration files follow a format similar to KITTI's. We provide calibration parameters for both half-resolution and full-resolution images.
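Because the files are described only as similar to KITTI, the following is a hedged, standard-library Python reader that assumes KITTI's plain-text `key: v1 v2 ...` layout. The key names used in DrivingStereo's files are not given here; `parse_calib_file` and `to_matrix` are hypothetical helpers.

```python
def parse_calib_file(path):
    """Parse KITTI-style 'key: v1 v2 ...' lines into {key: [float, ...]}.

    Lines without a colon, or whose values are not all numeric
    (for example a timestamp entry), are skipped.
    """
    params = {}
    with open(path) as f:
        for line in f:
            key, sep, rest = line.partition(":")
            if not sep:
                continue
            try:
                params[key.strip()] = [float(v) for v in rest.split()]
            except ValueError:
                continue  # non-numeric entry such as a date
    return params


def to_matrix(values, rows, cols):
    """Reshape a flat list of floats into a rows x cols nested list."""
    if len(values) != rows * cols:
        raise ValueError(f"expected {rows * cols} values, got {len(values)}")
    return [values[r * cols:(r + 1) * cols] for r in range(rows)]
```

For example, `to_matrix(parse_calib_file("calib.txt")["P_rect_101"], 3, 4)` would return a 3x4 projection matrix, where both the file name and the key `P_rect_101` are hypothetical placeholders for whatever the downloaded files actually contain.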

Overview

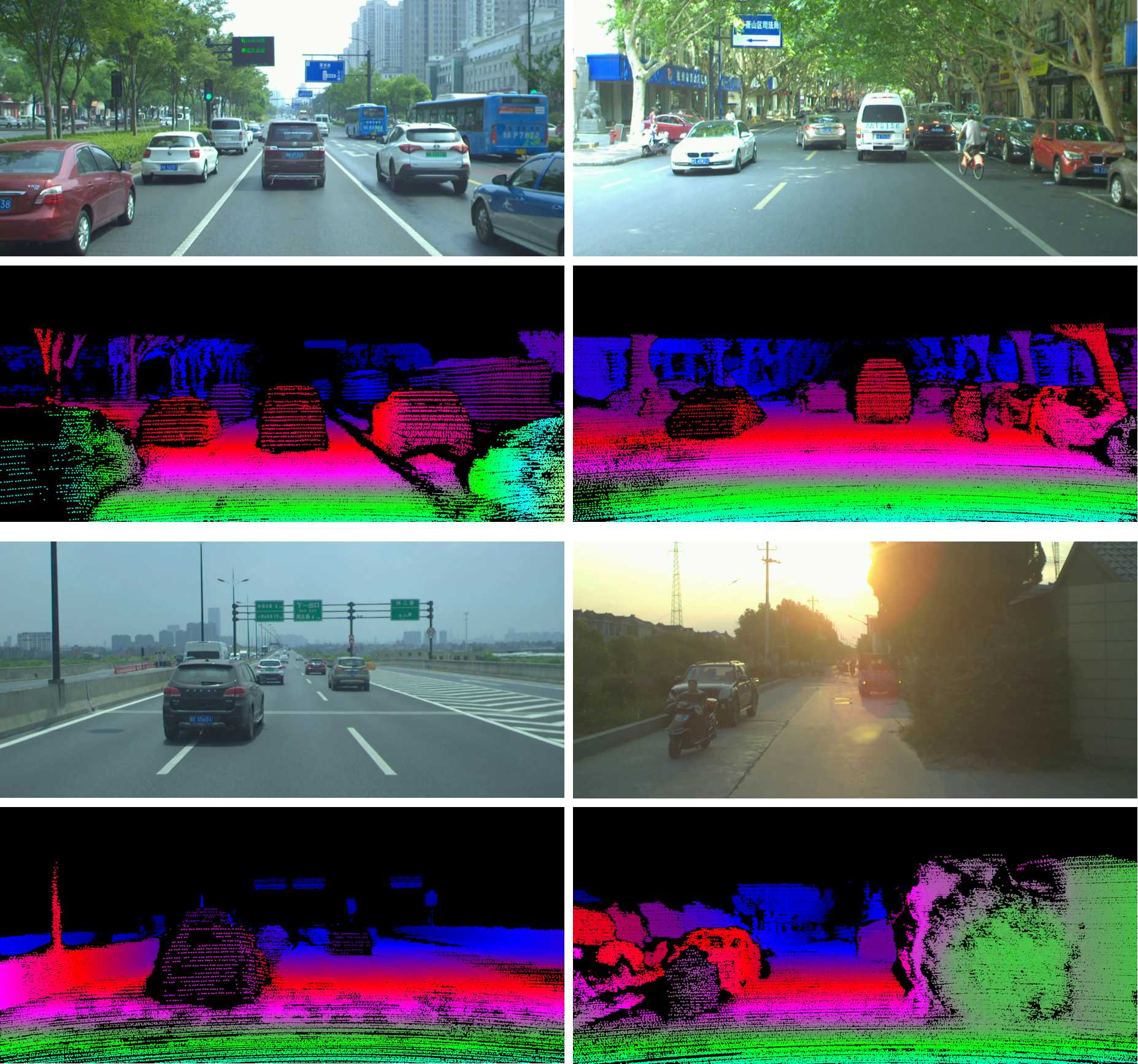

We construct a large-scale stereo dataset named DrivingStereo. It contains over 180k images covering a diverse set of driving scenarios, making it hundreds of times larger than the KITTI stereo dataset. High-quality disparity labels are produced from multi-frame LiDAR points using a model-guided filtering strategy. Compared with models trained on other datasets, deep-learning models trained on DrivingStereo achieve higher generalization accuracy in real-world driving scenes. The details of the dataset are described in our paper.

Authors

Guorun Yang, Xiao Song, Chaoqin Huang, Zhidong Deng, Jianping Shi, Bolei Zhou

Contact

yangguorun91@gmail.com, songxiao@sensetime.com

License

This dataset is released under the MIT license.

Citation

If you use our DrivingStereo dataset in your research, please cite this publication:

@inproceedings{yang2019drivingstereo,
  title={DrivingStereo: A Large-Scale Dataset for Stereo Matching in Autonomous Driving Scenarios},
  author={Yang, Guorun and Song, Xiao and Huang, Chaoqin and Deng, Zhidong and Shi, Jianping and Zhou, Bolei},
  booktitle={IEEE Conference on Computer Vision and Pattern Recognition (CVPR)},
  year={2019}
}